Five Key Factors in Architecting a Master Data Solution

Originally published in Hub Designs Magazine, May 2012

Over the last few years, Master Data has been recognized as one of the most important types of business information to be managed. Organizations are heading in the right direction by implementing Master Data Management (MDM) systems to take control of critical data like customers, products, employees, suppliers, materials, locations, etc.

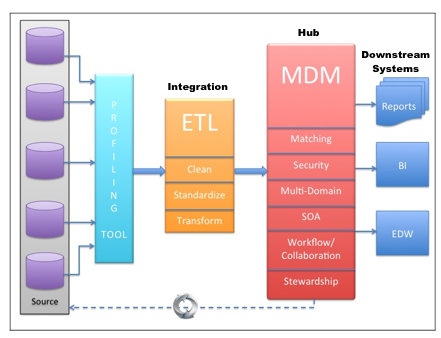

There are different architectures and styles that an organization can adopt while building its MDM solution. Gartner defines Consolidated, Registry, Coexistence and Transaction hubs as the top implementation styles. The hub-and-spoke architecture is a very common approach and provides a single consolidated repository of different master data entities. The main advantage of this architecture is its ability to provide an enterprise-wide, complete and accurate view (often referred to as the “360 Degree View” or “Single View of the Truth”) for various master data domains.

Implementing MDM requires in-depth changes to the way organizations work, partly because the technologies adopted here are fairly new, and mainly due to the cultural challenges MDM poses to most organizations. When a company is implementing an MDM hub, by definition, it is building a system that will have a footprint across all the departments and lines of business in the organization.

Statistics show that MDM implementations either take a long time to complete or in some cases, yield less than the expected return. While the key reasons are the failure to assess data quality and to establish data governance bodies, maturity of the organizations can also be a significant issue.

Over the past 8 years of providing MDM consultation across different industries, one other aspect I keep stumbling upon is the absence of teams having an end-to-end vision. An important role during MDM inception is that of the solution architect: the one who should have the complete picture of the solution, including the applications and technologies for profiling, data cleansing, consolidation, enrichment, de-duplication and synchronization, which are the key ingredients of the MDM recipe.

More than anything else, the solution architect should know the complete information system landscape and which integration mechanisms to apply, and should be able to estimate the hardware and infrastructure requirements based on the volume of data. Technical constraints and lack of expertise are common pitfalls, and knowing about them up front is key.

One can’t build a house without a blueprint. The same holds true for MDM implementations. Setting the right foundation and architecting the solution while keeping long-term goals in mind is crucial.

The following list outlines the five key factors that a solution architect should look into during MDM hub design. A carefully designed architecture covering each of these points helps create a sustainable and scalable solution. This is also a balanced, holistic approach that accelerates the implementation, thus helping to realize MDM benefits more rapidly.

- Data profiling to understand the current state of data quality

- Data integration mechanisms to consolidate the data

- Designing an extensible master data repository

- Robust data matching & survivorship functionality

- Seamless synchronization of master data

We’ll elaborate on each of these factors and note some of the key takeaways that help to architect a robust MDM solution.

Data profiling to understand current state of data quality


The reason most often cited for implementing MDM is to reduce dirty data in the organization. Cleansing data is a difficult, repetitive and cumbersome process. The politics and the viral nature of data and the silos that exist in the organization can all add to the muddle to make it even more challenging.

Given that, we need someone who can tell us how much of the data is dirty in the first place. Data profiling tools do that for you. Not only does data profiling provide extremely useful information about the quality of data, it also helps in discovering the underlying characteristics and discrepancies associated with the data.

Position the data profiling tool at the forefront of your MDM solution. The tool you use should be able to generate easy-to-interpret reports for pre- and post- data cleansing activities. As an architect, one has to put in place a well-designed, easy-to-use and effective data profiling tool.

The tool should be positioned to serve both the initial data migration and the ongoing data integration tasks. For example, data profiling can provide useful insights about data coming from a specific source at the beginning of the project. The generated report may include information such as the percentage of customers whose birth date is defaulted to 01-01-1901, or whose Tax ID is set to 111-111-1111. These errors need to be fixed either in the source or on the way into the MDM repository.
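As a rough illustration of the kind of report described above, here is a minimal Python sketch that counts missing and defaulted values. The field names (`birth_date`, `tax_id`), the placeholder sets and the `profile_customers` function are assumptions made for this example; a real profiling tool would cover far more checks.

```python
from collections import Counter

# Hypothetical placeholder values; real defaults differ per source system.
DEFAULT_BIRTH_DATES = {"01-01-1901"}
DEFAULT_TAX_IDS = {"111-111-1111"}


def profile_customers(records):
    """Return the percentage of records with missing or defaulted values."""
    total = len(records)
    if total == 0:
        return {}
    counts = Counter()
    for rec in records:
        if not rec.get("birth_date"):
            counts["birth_date_missing"] += 1
        elif rec["birth_date"] in DEFAULT_BIRTH_DATES:
            counts["birth_date_defaulted"] += 1
        if not rec.get("tax_id"):
            counts["tax_id_missing"] += 1
        elif rec["tax_id"] in DEFAULT_TAX_IDS:
            counts["tax_id_defaulted"] += 1
    return {name: round(100.0 * n / total, 1) for name, n in counts.items()}


# Made-up sample rows, only to show the shape of the report.
sample = [
    {"birth_date": "01-01-1901", "tax_id": "123-456-7890"},
    {"birth_date": "14-03-1975", "tax_id": "111-111-1111"},
    {"birth_date": "", "tax_id": "987-654-3210"},
    {"birth_date": "01-01-1901", "tax_id": None},
]
report = profile_customers(sample)
print(report)
```

Running the same function before and after cleansing gives a simple pre/post comparison for a given source.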

Although profiling is a key aspect, don’t let this become a major hindrance to the overall architecture. So be cautious about the time you take here. The biggest benefit of profiling comes in the form of providing accurate estimates for the data integration effort, which is the next part of our solution.

Data integration mechanisms to consolidate the data

While data profiling helps us determine the rules required, data integration tools actually transform the data. They help in applying numerous rules so that an intermediate clean state of data can be reached.

Historically, data integration tools have been helping business to efficiently and effectively gather, transform and load data during mergers and acquisitions, or during the streamlining of different departments. When it comes to MDM implementations, these tools play a crucial role as they can pretty much “make or break” the establishment of an MDM hub.

A well-architected, sustainable and fast batch ETL solution may be appropriate for integrating the source and target systems with a Master Data hub. Careful consideration should be given to the timely availability of the data by designing the optimal number of staging areas. The architect should know exactly what goes through batch ETL versus real-time data integration. It’s very common to integrate source data via batch processing but as the hub evolves, there will be more need for real-time availability of data.

For real-time data integration, it is better to leverage integration tools based on an Enterprise Service Bus (ESB) and / or Service Oriented Architecture (SOA).

Clearly define the steps needed to prepare the data, the processing that needs to happen, and the volume of data flowing through the solution on a daily basis. Design the ETL solution as generically as possible so that new sources can be integrated with minimal or no changes. If the groundwork is flawed, your future integration tasks will become difficult and time-consuming.
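One way to keep the load generic is to drive it from per-source mapping configuration, so that onboarding a new source means adding a mapping rather than writing new code. The sketch below assumes two made-up sources (`crm`, `billing`) and a hypothetical `to_staging` function; it is not tied to any particular ETL product.

```python
# Hypothetical per-source field mappings: source column -> staging column.
# Adding a new source should only require a new entry here.
SOURCE_MAPPINGS = {
    "crm": {"cust_name": "name", "phone_no": "phone"},
    "billing": {"FULLNAME": "name", "TEL": "phone"},
}


def to_staging(source, row):
    """Map one source row onto the common staging layout."""
    mapping = SOURCE_MAPPINGS[source]
    staged = {target: row.get(src) for src, target in mapping.items()}
    # A simple standardization rule: collapse whitespace and title-case names.
    if staged.get("name"):
        staged["name"] = " ".join(staged["name"].split()).title()
    # Keep the origin so cross references can be built later.
    staged["source_system"] = source
    return staged


crm_row = to_staging("crm", {"cust_name": "  john   SMITH ", "phone_no": "555-0100"})
billing_row = to_staging("billing", {"FULLNAME": "jane doe", "TEL": "555-0111"})
print(crm_row)
print(billing_row)
```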

Designing an extensible master data repository

I listed some of the key master data management functionalities on my blog some time ago. The following points should be closely looked at by the architect while designing the master data tools and technologies:

- A flexible data model is a key feature of the MDM product you choose. Depending on the domain implemented, there will be changes to the model. Many vendors provide a pre-defined model, but chances are you will have to modify it to meet specific needs. Choose these modifications carefully, keeping in mind the long-term usage of the data elements.

- Multi-domain implementations have become very common recently. Usually, you’ll be implementing at least two or three domains together (customer-location-account, customer-location-product, product-location, etc.). Design an approach that supports easier management of these domains and the complex relationships between them.

- Security is an important aspect of the solution. As MDM is an enterprise-wide solution, it has to support integration with the existing security registries of the organization.

- Audit functionality is a very important requirement, both for capturing historical values of master data and for regulatory compliance. Design your solution to capture WHO changed the data, WHEN and WHY. Track changes coming from source systems or individual users and build cross references to each connected system.

- Implement maintenance processes to control the quality of data flowing into and out of MDM. Data stewards play a key role here in identifying incorrect data and in authorizing and applying corrections, so that high-quality data is delivered to outbound channels. Your solution should provide interfaces and tools to support the stewards in effectively identifying issues and correcting them easily. This is a challenging task and often depends on the flexibility offered by the underlying hub product.

- The architect should carefully look into the non-functional requirements (NFRs) such as scalability, performance, security, maintenance, and upgradability of the product. These NFRs will always co-exist with the functional requirements of the organization to help achieve the desired end state. If the hub is not engineered well to handle increasing workload requirements, it’s not going to live long in the organization.
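To make the audit point more concrete, here is a minimal Python sketch of a master record that keeps cross references to source systems and records who changed each attribute, when and why. The class and field names (`MasterRecord`, `AuditEntry`, `cross_refs`) are assumptions for illustration, not the data model of any specific MDM product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditEntry:
    attribute: str
    old_value: object
    new_value: object
    changed_by: str       # WHO: a source system or an individual user
    changed_at: datetime  # WHEN
    reason: str           # WHY


@dataclass
class MasterRecord:
    master_id: str
    attributes: dict = field(default_factory=dict)
    cross_refs: dict = field(default_factory=dict)  # source system -> source key
    history: list = field(default_factory=list)

    def update(self, attribute, value, changed_by, reason):
        """Apply a change and append an audit entry; no-op if nothing changes."""
        old = self.attributes.get(attribute)
        if old == value:
            return
        self.history.append(
            AuditEntry(attribute, old, value, changed_by,
                       datetime.now(timezone.utc), reason)
        )
        self.attributes[attribute] = value


rec = MasterRecord("M1")
rec.cross_refs["crm"] = "C-42"  # hypothetical key of this customer in the CRM
rec.update("phone", "555-0100", changed_by="crm", reason="initial load")
rec.update("phone", "555-0199", changed_by="steward.jane", reason="customer called")
for entry in rec.history:
    print(entry.changed_by, entry.attribute, entry.old_value, "->", entry.new_value)
```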

Robust data matching and survivorship functionality

A powerful and effective fuzzy matching engine is a must-have to eliminate duplicate or redundant data. If you look at the matching capabilities of the MDM tools available in the market, most of them do a great job of identifying duplicate records. However, the tools are not simple to use because of the complex matching criteria used in every implementation.

Although certain commonalities exist among organizations, there are always variations to the rules used to compare data elements.

The first challenge is to determine if there are matches. Figure out the business definition of ‘a match’ in your organization. To define this, you will have to come up with a list of critical elements which are necessary for matching. Next, you have to assign weights to these elements. For example, a phone number match is given more weight than a name match or a date of birth match. Many MDM products come with certain pre-defined matching algorithms, and your best bet is to start from those and adapt them to your specific scenario. Careful consideration of the algorithm, testing and sampling are the keys here.
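The weighting idea can be sketched in a few lines of Python. The weights, the field names and the use of `difflib.SequenceMatcher` as the similarity measure are all assumptions chosen for illustration; commercial matching engines use their own, more sophisticated algorithms.

```python
from difflib import SequenceMatcher

# Illustrative weights only: phone counts for more than name or birth date.
WEIGHTS = {"phone": 0.5, "name": 0.3, "birth_date": 0.2}


def similarity(a, b):
    """Return a 0..1 similarity for two strings; missing values score 0."""
    if not a or not b:
        return 0.0
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def match_score(rec_a, rec_b):
    """Weighted sum of per-field similarities, between 0 and 1."""
    return sum(
        weight * similarity(rec_a.get(name), rec_b.get(name))
        for name, weight in WEIGHTS.items()
    )


a = {"name": "John Smith", "phone": "555-0100", "birth_date": "1975-03-14"}
b = {"name": "Jon Smith", "phone": "555-0100", "birth_date": "1975-03-14"}
c = {"name": "Mary Jones", "phone": "555-0199", "birth_date": "1982-11-02"}

likely_duplicate = match_score(a, b)
unlikely_duplicate = match_score(a, c)
print(likely_duplicate, unlikely_duplicate)
```

The score would then be compared against thresholds agreed with the business, for example one above which records merge automatically and a lower one that routes pairs to a data steward.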

One of the questions I often face is whether to use deterministic matching or probabilistic (fuzzy) matching. Believe it or not, there are many organizations that feel more comfortable using deterministic matching, although it’s not as efficient or realistic as probabilistic matching.

Choose the option that fits the customer’s requirements. For example, if the customer says “we want to make sure that Tax ID is exactly the same to ensure we have a duplicate record”, you need to design your matching rules to accommodate this.
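A deterministic rule of this kind might look like the following Python sketch. The `tax_id` field name and the decision to ignore punctuation and case before comparing are assumptions; whether “exactly the same” allows such normalization is something to confirm with the business.

```python
def normalize_tax_id(value):
    """Strip punctuation and spaces so formatting differences are ignored."""
    return "".join(ch for ch in (value or "") if ch.isalnum()).upper()


def is_deterministic_match(rec_a, rec_b):
    """True only when both records carry the same non-empty Tax ID."""
    a = normalize_tax_id(rec_a.get("tax_id"))
    b = normalize_tax_id(rec_b.get("tax_id"))
    return bool(a) and a == b


same = is_deterministic_match({"tax_id": "123-45-6789"}, {"tax_id": "123456789"})
different = is_deterministic_match({"tax_id": "123-45-6789"}, {"tax_id": "123-45-6780"})
missing = is_deterministic_match({"tax_id": None}, {"tax_id": ""})
print(same, different, missing)
```

Known placeholder values found during profiling should be excluded before a rule like this is applied, otherwise every record carrying the same dummy Tax ID would match.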

Data survivorship is another major puzzle. You will have to find the best answers for the following questions when you merge two or more duplicate records:

- What data elements survive when you do the merge?

- How do you synchronize this data with the source systems so that everyone producing the data knows about the change?

- How do you handle scenarios in which two non-duplicates are merged, in other words, false positive matches that result in a merge? In reality, false positives are almost unacceptable, while false negatives, though unfortunate, are acceptable provided they seldom occur. The solution has to provide a way to ‘un-do’ merges that happen accidentally.
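The first and last questions can be illustrated with a small Python sketch. It assumes one possible survivorship rule (the most recently updated non-empty value wins) and keeps the contributing records so that an accidental merge can be reversed. The record layout and the `merge` and `unmerge` names are hypothetical; real products offer richer, configurable rules.

```python
import copy


def merge(records):
    """Build a golden record: the most recent non-empty value wins per attribute.

    The untouched source records are kept alongside the result so the merge
    can be reversed later.
    """
    ordered = sorted(records, key=lambda r: r["updated_at"])  # oldest first
    golden = {}
    for rec in ordered:
        for key, value in rec["attributes"].items():
            if value not in (None, ""):
                golden[key] = value  # later records overwrite earlier ones
    return {"attributes": golden, "merged_from": copy.deepcopy(records)}


def unmerge(merged):
    """Undo a merge by returning the original contributing records."""
    return copy.deepcopy(merged["merged_from"])


# Made-up records; ISO dates are used so string comparison orders them correctly.
crm = {"source": "crm", "updated_at": "2012-01-10",
       "attributes": {"name": "John Smith", "phone": "", "email": "john@example.com"}}
billing = {"source": "billing", "updated_at": "2012-03-05",
           "attributes": {"name": "J. Smith", "phone": "555-0100", "email": ""}}

merged = merge([crm, billing])
restored = unmerge(merged)
print(merged["attributes"])
```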

Seamless synchronization of master data

Architects spend enormous time on system integration. No exceptions here. MDM must seamlessly integrate with a variety of applications. Whether it’s the system that’s responsible for data entry, or the data warehouse or the business intelligence system, MDM should bring the right information to the right person at the right time.

Realistically speaking, no MDM solution will replace all the sources of enterprise information overnight. It’s a continuously evolving process. Keeping this in mind, the architecture you design should allow other sources of master data to leverage the features of MDM as soon as possible.

Here are some of the important architectural concerns that need to be nailed down:

- Automation and quick synchronization of data, ensuring that higher data quality is maintained between transitions.

- When duplicate records are consolidated, find a way to synchronize this update with the sources while they still exist. Although the hub architecture leads to an eventual single view of master data entities across the organization, this is a long-term affair. To show the value added by MDM, you’ll have to allow two-way communication between MDM and the existing sources of master data.

- The frequency at which data flows into downstream applications such as the enterprise data warehouse, business intelligence and data marts should be thought through. Although most of these applications need delta changes on at least a daily basis, some may require real-time updates.

- Availability of key data elements (typically via a web service interface) to the business users and data stewards for deeper analysis.

- Knowing which type of data transfer mechanism fits a given data integration point is critical. Notification mechanisms can be asynchronous (most of the time), which helps in completing MDM transactions faster.
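The asynchronous notification idea can be sketched with Python’s standard `queue` and `threading` modules: the MDM transaction only enqueues a change event and returns, while a background worker delivers it to subscribers. The `ChangePublisher` class and the event fields are assumptions for illustration; a production hub would typically rely on a message broker or ESB, with retries and error handling that this sketch omits.

```python
import queue
import threading


class ChangePublisher:
    """Deliver change events to subscribers on a background thread."""

    def __init__(self):
        self._queue = queue.Queue()
        self._subscribers = []
        worker = threading.Thread(target=self._run, daemon=True)
        worker.start()

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def publish(self, event):
        # Returns immediately; the MDM transaction does not wait for delivery.
        self._queue.put(event)

    def _run(self):
        while True:
            event = self._queue.get()
            try:
                for callback in list(self._subscribers):
                    callback(event)
            finally:
                self._queue.task_done()

    def flush(self):
        """Block until every queued event has been delivered."""
        self._queue.join()


received = []
publisher = ChangePublisher()
publisher.subscribe(received.append)  # stands in for a downstream system
publisher.publish({"master_id": "M1", "change": "merge"})
publisher.publish({"master_id": "M2", "change": "update"})
publisher.flush()
print(received)
```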

There are many instances where solutions get architected poorly and thus add more time and effort in realizing the full potential of MDM. I hope the points discussed here will help you architect a more robust solution.

COMMENTS


I’d be inclined to show the data quality/cleansing tool as a separate component in the diagram, because neither a data profiling nor an integration tool has the powerful features of leading data quality tools. Obviously some cleansing can be done with data integration tools, but I’d think that integration is separate from the data cleansing/quality tools.

For instance, data quality tools such as Trillium are great at enrichment, a feature which is missing in the diagram. Similarly, the business rules component and data synchronisation components are missing.

I’d also think that the BI tool is more likely to get its data from an EDW. It may use master data as well, but given the nature of BI, the EDW would be a more likely candidate for BI and reporting needs. Reports would be generated from the EDW, BI and maybe even MDM, such as for profiling purposes.

Majeet, Thanks for commenting.

I agree with you on the enhancements you are suggesting for this diagram. The EDW is the primary source catering to BI applications because it stores transactional data, which we do not store in MDM. But MDM is the backbone that supplies the core master data entities required by EDW and BI applications.

As I mentioned in my reply to your comment on my original post in @HubDesigns magazine, the focus of this article is the architecture required to create an MDM system. Keeping that in mind, I represented the data flows in this diagram at a high level (more like a 100 ft view). I will address the actual flow in a future article.


Brilliant article outlining very important matters in an MDM implementation. In most cases, at least those that I have been involved in, teams become so focused on their solution silos, be it ETL, hub development or profiling, that they tend to lose focus on the ultimate objective. So the architect has to play that role in conscientising the various teams and ensuring their consistent alignment towards the ultimate objective.

Great blog and writeup. It would be good to see if things have moved on since the first writeup. What are the current MDM solutions out there in the context of cloud-based technologies such as SaaS?
